The boasting in dev and network operations has changed. People still ask “How many servers do you have?” to see whether they manage more servers than the other guy. The question has stayed the same, but the response has been turned on its head. Now, if you are managing zero servers, you are some kind of genius. This is the birth of Serverless, and maybe the end of physical and virtual servers for all of us (except for those working in data centres).

Salesforce’s jump on to the AWS bandwagon

Back in May, Salesforce announced that it had selected Amazon AWS as its preferred public cloud infrastructure provider. For me, this was incredibly exciting, as I work in both the Salesforce and AWS worlds (in fact, as well as holding my Salesforce certifications, I’m also an AWS Certified Architect). At the announcement, they said the move was to aid the international expansion of their core services, including Sales, Service, App Cloud, Community Cloud and Analytics Cloud. Salesforce isn’t new to AWS; they have Heroku, Marketing Cloud Social Studio and SalesforceIQ all running on AWS (although mostly through acquisition). But the bread and butter of Salesforce is its core Force.com platform, which powers the Sales and Service Clouds. This platform is currently hosted entirely in traditional data centres on Salesforce’s own hardware and infrastructure. But now, based on their statement, I’m thinking they are looking at moving to Serverless!

Salesforce invented “Platform as a Service.”

You may not know that Salesforce owned the trademark for “App Store” before giving it to Apple. In the same way, Salesforce invented the term “Platform as a Service”, and they pitched it to Gartner to make sure it stuck in the industry’s mind. Now AWS is an increasingly powerful player in this space.

Why move to AWS?

In the 90s you needed to know Microsoft or Linux; now, if you’re in the biggest enterprises, you need to know AWS. AWS is used for enterprise hosting, websites, virtual servers, big elastic compute and many other things. AWS was initially set up to solve a problem Amazon had. They had thousands of servers sitting around doing nothing for most of the year, switched on only for the “Black Friday” deals or Christmas to cope with the surge in traffic. Amazon realised the waste and decided to resell this unused compute power to other people. This allowed those customers to spin up and tear down servers at a moment’s notice on a pay-by-the-minute model. But now AWS has gone a step further, into the world of serverless architecture.

Once the Salesforce platform is on AWS, how about refactoring elements in the Salesforce stack to auto scale the infrastructure when demand gets high? How about leveraging the granular pricing within AWS and allowing customers to purchase extra CPU time or higher SOQL/DML limits? Maybe if they start leveraging AWS auto scaling they can scale down instances during the night and shift that compute saving over to peak daylight hours, increasing performance for customers and maybe even relaxing governor limits on the back of Salesforce saving a tonne of money?

BUT THIS ISN’T SERVERLESS! You still need to spin up virtual servers and infrastructure in the background when you get peak demand, either automatically based on CPU/load/time of day, or manually. If you are working with an architecture that has incredibly “peaky loads”, then by the time you have spun up the new servers it may already be too late!
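To make the “too late” problem concrete, here is a minimal sketch of server-based auto scaling with a boot delay. Everything in it is an assumption for illustration: the function name, the scaling rule, the per-server capacity and the boot time are all made up, and this is not how AWS auto scaling is actually implemented.

```python
def simulate_dropped_requests(load_per_second, capacity_per_server,
                              boot_delay_s, start_servers=1):
    """Return how many requests go unserved in each second.

    Hypothetical scaling rule: when load exceeds what is running plus
    what is already on order, request the missing servers. They only
    come online boot_delay_s seconds later.
    """
    servers = start_servers
    pending = []  # (second the servers become ready, number of servers)
    dropped = []
    for t, load in enumerate(load_per_second):
        # Bring online any servers whose boot has finished.
        servers += sum(n for ready, n in pending if ready <= t)
        pending = [(ready, n) for ready, n in pending if ready > t]

        capacity = servers * capacity_per_server
        dropped.append(max(0, load - capacity))

        # Order more servers if we are still short.
        on_order = sum(n for _, n in pending)
        needed = -(-load // capacity_per_server)  # ceiling division
        shortfall = needed - servers - on_order
        if shortfall > 0:
            pending.append((t + boot_delay_s, shortfall))
    return dropped


# A spike from 100 to 700 requests/sec with a 3 second boot time.
print(simulate_dropped_requests([100, 100, 700, 700, 700, 700], 100, 3))
# prints [0, 0, 600, 600, 600, 0]
```

In this toy run the extra servers arrive three seconds after the spike starts, so most of the spike is dropped in the meantime. Real boot times are usually far longer than three seconds, which only makes the gap wider.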

So what is this serverless mumbo-jumbo?

Serverless is still in its infancy, but I’m currently working with a company that runs quite an impressive web based service with not a single server in sight. They use some of the serverless features within AWS to create a 100% serverless architecture. The goal is not to get charged for resources if none of your users are using your solution.

The biggest betting event may be the Super Bowl in the US, but in the UK it’s the Grand National. Even those of us who never normally bet have probably placed a bet on the Grand National at some point in our lives. Forget “Black Friday”, where sales are spread over 24 hours; with the Grand National, most customers place their bets in the couple of minutes before the race starts. Because of this, Sky Bet has incredibly “peaky loads”. One minute they could be serving 300,000 requests/sec, then suddenly 700,000.

The beauty of a serverless architecture is that the entire architecture can handle 1 request a second or 1 million requests a second, from one second to the next, eliminating the time it takes to scale up your hardware… it just works.

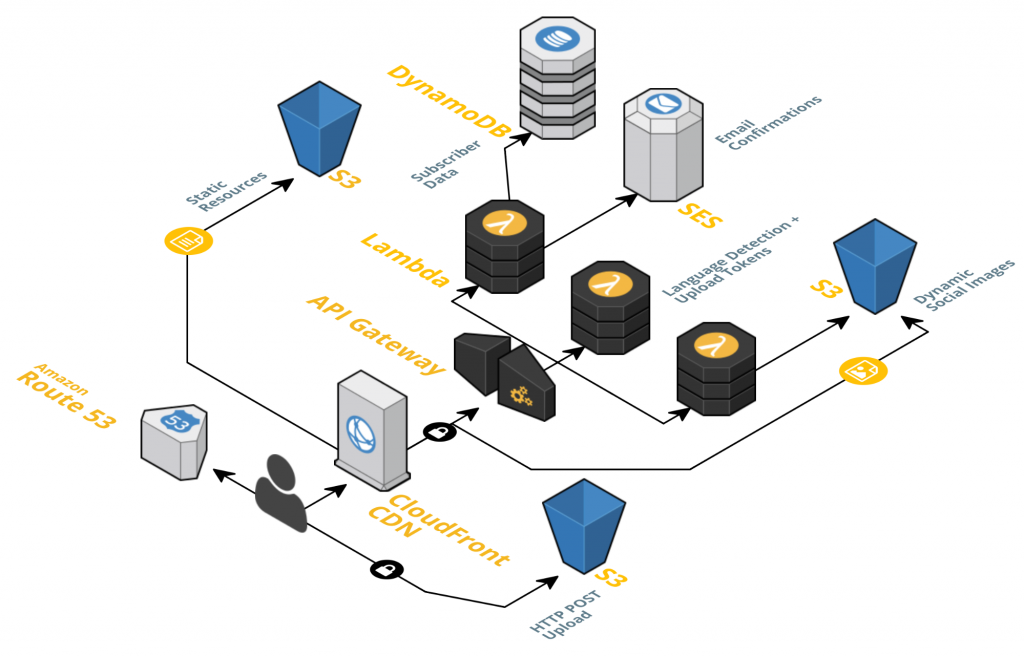

Example of a Serverless architecture

The above example is a typical serverless architecture in AWS.

- Route 53; Think of this as a highly available & scalable DNS service which also manages traffic flow based on different routing types, e.g. Latency Based Routing, Geo DNS and Weighted Round Robin, as well as DNS failover.

- CloudFront CDN; As it says on the tin, this is a CDN service.

- S3; This is static file storage which can hold petabytes of data if you want, and is designed for 99.999999999% (eleven nines) durability.

- API Gateway; This is a REST based service which allows you to create, publish, monitor and quickly scale & secure API services.

- Lambda; Think of this as server side code. This is a compute service where you can upload your code and when a request comes in the Lambda code is executed.

- DynamoDB; This is a very scalable database which guarantees the same consistent speed of read and write requests.

- SES; Mass emailing service.

The important thing to understand with serverless technology at the moment is that the majority of the code is executed on the client, which makes JavaScript web service calls to the AWS REST API Gateway for specific server side requests. So there isn’t a PHP or Java framework/layer behind the HTML/JavaScript code, just a REST API Gateway, and this is what makes it so scalable.

So, when a customer hits the serverless architecture, they are presented with the HTML/JavaScript of the website, served by the AWS CloudFront and S3 services (this could, for example, be using the ever popular AngularJS framework). If the user logs in to the website, their login details are sent via a REST call to the API Gateway and the server side code is executed in a Lambda function. The Lambda function then does whatever is needed and returns the REST result, which may tell the client to navigate to another page stored in AWS S3.
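As a rough illustration of the login step, here is a minimal sketch of what the Lambda side could look like in Python, assuming API Gateway is set up with a Lambda proxy integration (so the request body arrives as a string in `event["body"]` and the function returns a `statusCode`/`headers`/`body` dictionary). The `DEMO_USERS` store, the `next` field and the `/dashboard.html` path are hypothetical; a real service would check hashed passwords held in something like DynamoDB.

```python
import hmac
import json

# Hypothetical user store, for illustration only. A real deployment would
# look users up in a database and compare password hashes, not plain text.
DEMO_USERS = {"alice": "correct-horse"}


def _response(status_code, payload):
    """Build the response shape used by an API Gateway Lambda proxy integration."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def handler(event, context):
    """Handle a login request forwarded by API Gateway."""
    try:
        body = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "invalid JSON"})
    if not isinstance(body, dict):
        return _response(400, {"error": "expected a JSON object"})

    username = str(body.get("username", ""))
    password = str(body.get("password", ""))
    expected = DEMO_USERS.get(username)

    # compare_digest avoids leaking information through timing differences.
    if expected is None or not hmac.compare_digest(
        expected.encode(), password.encode()
    ):
        return _response(401, {"error": "invalid credentials"})

    # Tell the client which static page (served from S3) to load next.
    return _response(200, {"next": "/dashboard.html"})
```

The function holds no state between calls, which is what lets the platform run as many copies in parallel as the traffic needs.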

The beauty of this is the solution completely scales regardless of the number of requests, and you are only charged (more or less) for just the data transport and execution of the request. Once the request is complete, there is nothing to charge for. Now, in reality, AWS does charge for the storage of the static web content and database storage, but that is minimal compared with the compute required in traditional architectures.
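The pricing argument can be sketched as simple arithmetic. The functions and every price below are placeholders chosen to make the sums easy to follow; they are not actual AWS rates, so plug in current figures from the AWS pricing pages before drawing any conclusions.

```python
def pay_per_request_cost(requests, price_per_million_requests,
                         storage_gb, price_per_gb_month):
    """Monthly cost when you pay per request plus a small storage fee."""
    request_cost = requests / 1_000_000 * price_per_million_requests
    storage_cost = storage_gb * price_per_gb_month
    return request_cost + storage_cost


def always_on_cost(servers, price_per_server_hour, hours_per_month=730):
    """Monthly cost of servers that run whether or not anyone uses them."""
    return servers * price_per_server_hour * hours_per_month


# Placeholder prices, NOT real AWS rates.
quiet_month = pay_per_request_cost(0, 1.0, 10, 0.5)          # storage only
busy_month = pay_per_request_cost(2_000_000, 1.0, 10, 0.5)   # requests + storage
two_servers = always_on_cost(2, 0.5)                         # same bill every month

print(quiet_month, busy_month, two_servers)
# prints 5.0 7.0 730.0
```

With no traffic the pay-per-request bill falls to the storage fee alone, while the always-on servers cost the same regardless of usage.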

Want to learn more?

I’m going to be at Serverless Conf, an event dedicated to serverless technologies and learning from the industry experts. The event runs from 26th to 28th October. Check out the website for more info; there is also a similar event in Tokyo, and you can watch the videos from the New York event in May!

More info on ServerlessConf London

Hope to see you there!
